Extended abstract on Bootstrapping Indoor Semantic Digital Twins from 2D Video accepted at German Robotics Conference 2026

Our extended abstract “Bootstrapping Indoor Semantic Digital Twins from 2D Video” has been accepted to the 2nd German Robotics Conference (GRC).

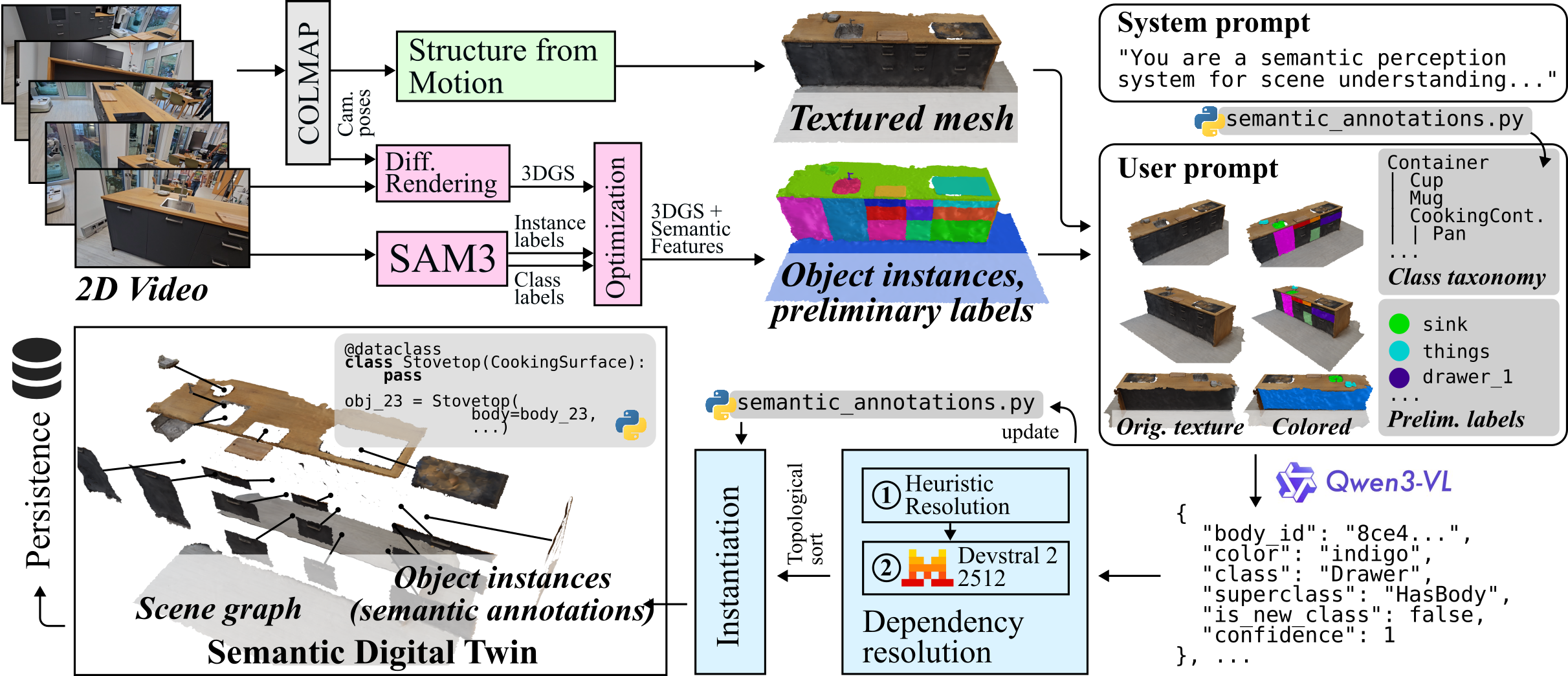

In this abstract, we explore first steps toward the vision of creating full-fledged semantic digital twins purely from 2D video, without requiring additional manual modeling or annotation. We want to enable people to point their phone camera at, for example, their living room and automatically obtain a semantically annotated digital reconstruction of the room. The resulting digital twins enable robots to understand their environment, plan actions, and control their movements in ways that are robust and interpretable to human users.

Our system reconstructs 3D object geometry via Gaussian Splatting and segments the objects in the environment using SAM3. It automatically performs open-vocabulary classification, conditioned on a taxonomy of prior knowledge, and persists the resulting semantic digital twin to a queryable database. When the existing semantic categories are insufficient, the vision-language model (VLM) dynamically proposes novel classes and automatically extends the class taxonomy. Because the class taxonomy is realized as Python data structures, the learning system effectively rewrites and evolves its own code at runtime.
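As a rough illustration of the runtime taxonomy extension described above, the following minimal sketch shows how a taxonomy held as Python classes could grow while the program is running. It is not the project's actual implementation: the class names, the `known_classes` and `extend_taxonomy` helpers, and the hard-coded proposal dictionary (standing in for a VLM response) are all hypothetical.

```python
# Hypothetical seed taxonomy: each semantic category is a Python class.
class PhysicalObject:
    """Root of the illustrative taxonomy."""


class Furniture(PhysicalObject):
    """Objects that furnish a room."""


class Chair(Furniture):
    """Furniture designed for sitting."""


def known_classes(root):
    """Return a name -> class mapping for root and all of its subclasses."""
    found = {root.__name__: root}
    for sub in root.__subclasses__():
        found.update(known_classes(sub))
    return found


def extend_taxonomy(root, name, parent_name):
    """Create a new category under an existing parent, or reuse it if present.

    Raises KeyError if the proposed parent is not part of the taxonomy.
    """
    classes = known_classes(root)
    if name in classes:
        return classes[name]
    parent = classes[parent_name]
    # type() builds a new class object at runtime; it behaves like a
    # class that was written out in the source file.
    return type(name, (parent,), {"__doc__": f"Category added at runtime under {parent_name}."})


# Assumption: a proposal as it might be parsed from a VLM response.
proposal = {"name": "Beanbag", "parent": "Furniture"}
Beanbag = extend_taxonomy(PhysicalObject, proposal["name"], proposal["parent"])
```

After this call, `Beanbag` is an ordinary subclass of `Furniture`, so existing code that reasons over the class hierarchy (for example with `issubclass`) picks up the new category without modification.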

Joint work with Luca Krohm, Patrick Mania, Maciej Stefanczyk, Artur Wilkowski, and Michael Beetz.

The source code is available on GitHub.